"How to reach LEVEL 5 in autonomous vehicles, robots, cars etc."

US 6172941 (filing date: Dec. 16, 1999, granted 2001) see Google Patents

EP 1146406A1 (filing date: Dec. 03, 1999)

Inventor & Author: Erich Bieramperl, 4040 Linz, Austria - EU

Elapse Time Quantizing

Autoadaption-Theorem

Algorithm of Life

The Neuronal Code

The Meaning of the Tetragrammaton JHWH / YHVH

A Step to a New Universal Theory?

A method to generate recognition, auto-adaptation and self-organization in autonomous mechanisms and organisms. A number of sensing elements generate analog signals whose amplitudes are classified into different classes of perception intensity. The currently occurring elapse times between phase transitions are recorded and compared with prior recorded elapse times in order to find covariant time sequences and patterns. A motion actuating system can be coupled to the assembly, which is controlled by pulse sequences that have been modulated in accordance with the covariant time sequences. In this way the mechanism or organism in motion is prompted to emulate the found covariant time sequences, while being able to recognize its own motion course and adapting itself to changes of environment.

Background

This invention describes a method for generating processes that facilitate the self-organization of autonomous systems. It can be applied to mechanistic fields as well as to molecular/biological systems. By means of the invention described herein, it is possible for a system in motion to recognize external events in a subjective way through self-observation; to identify the surrounding physical conditions in real time; to reproduce and to optimize the system's own motions; and to enable a redundancy-poor process that leads to self-organization.

Robot systems of the usual static type are

mainly based on deterministic path dependent regulating processes.

The digital outputs and values that control the robot's position are

stored in the memory of a central computer. Many degrees of freedom

can be created by a suitable arrangement of coordinating devices.

Position detectors can be devices such as tacho-generators, encoders,

or barcode rulers scanned by optical sensors that provide path

dependent increment pulses. The activation mostly takes place by

means of stepper motors.

It is also well-known that additional

adaptive regulating processes based on discrete time data are used

in path dependent program control units. These data are produced by

means of the SHANNON-quantization method, utilizing

analog-to-digital converters to sample the amplitudes of sensors and

transducers. They serve to identify the system's actual value (i.e.

its current state). Continued comparison of reference values and actual values is necessary for correction and adjustment of the

regulating process. Newly calculated parameters are then stored in

the memory. This kind of adaptive regulation is necessary, for

example, in order to eliminate a handling robot's deviations from a

pre-programmed course that are caused by variable load conditions.

If a vehicle that is robot-controlled in this way were to be placed

into an autonomous state, it would generally be impossible to

determine its exact position reference (i.e. coordinates) by means

of tachogenerators or encoders. For this reason controlling values

(or commands) cannot be issued by a computer - or preprogrammed into

a computer - in an accurate manner. This is true not only for

robot-controlled automobiles, gliding vehicles, hovercraft or

aircraft, but also for rail-borne vehicles for which the distance

dependent incremental pulses are often inaccurate and therefore not

reproducible. This is usually caused by an uneven surface or worn or

slipping wheels. Explorer robots, which are used to locate objects

or to rescue human beings from highly inaccessible or dangerous

locations, must therefore be controlled manually, or with computer

supported remote control units. A video communication system is

necessary for such cases in order to be able to monitor the motion

of the robot. However, in many applications of robotics, this is

inadequate. A robot-controlled automobile, for example, should be

capable of avoiding dangerous situations in real time, as well as

being capable of adapting its speed to suit the environment, without

any human intervention. In such cases, it is necessary for the

on-board computer to recognize the situation at hand, then calculate

automatically the next steps to be carried out.

In this way the

robot-controlled vehicle ought to have a certain capability for

self-organization. This is also true for other robot-controlled

systems.

With regard to autonomous robot systems, techniques

already exist to scan the surroundings by means of sensors and to

analyze the digital sensor data that were acquired using the

above-mentioned discrete time quantization method (see Fig. 1); and

there already exist statistical calculation methods and algorithms

that generate suitable regulating parameters. Statistical methods

for handling such regulating systems were described in 1949 by

Norbert WIENER. According to the SHANNON theorem, the scanning of

the external environment must be done with at least double the

frequency of the signal amplitude bandwidth. In this way the

information content remains adequate. In order to be able to

identify the robot's own motions, very high sampling rates are

necessary. This amplitude quantization method currently in

widespread use requires the correlation of particular measurement

data to particular points in time (Ts) that are predetermined using

the program counter. For this reason this should be understood as a

deterministic method. However, practical experience has shown that

even ultrahigh-speed processors and the highest sampling rates

cannot provide sufficient efficiency. The number of redundant data

and the amount of computing operations increase drastically when a

moving sensor-controlled vehicle meets new obstacles or enters new

surroundings at variable speed. Indeed, C. SHANNON's quantization

method does not allow recognition of an analogue signal amplitude in

real time, especially if there are changing physical conditions or

variable motions for which the acquisition of additional information

regarding the instantaneous velocity is necessary. This is also true

if laser detectors or ultrasonic sensors are used, for which mainly

distance data are acquired and processed.

Therefore, although this quantization method is suitable for analyzing the trace of a motion and for representing this motion on a monitor (see Pat. AT 397 869), it is hardly adequate for recognizing the robot's own motion, or for reproducing it in a self-adaptive way.

Some autonomous mobile robot systems operate with CCD sensors and OCR software (i.e. utilising image processing). These deduce contours or objects from color contrast and brightness differentials, which are interpreted by the computer as artificial horizons or orientation marks.

Examples of this technology are computer-supported guidance systems

and steering systems that allow vehicles to be guided automatically

by centre lines, side planks, street edges and so on. CCD sensors -

when one observes how they operate - are analog storage devices that

function like well-known bucket brigade devices. Tightly packed

capacitors placed on a MOS silicon semiconductor chip are charged by

the photoelectric effect to a certain electrical potential. Each

charge packet represents an individual picture element, termed

"pixel"; and the charge of each pixel is a record of how bright that

part of the image is. By supplying a pulse frequency, the charges

are shifted from pixel to pixel across the CCD, where they appear at

the edge output as serial analog video signals. In order to process

them in a computer, they must be converted into digital quantities.

This requires a large number of redundant data and calculations;

this is why digital recording of longer image sequences necessitates

an extremely large high speed memory. Recognizing isomorphous

sequences in repetitive motions is only possible with large memory

and time expenditure, which is why robotic systems based on CCD

sensors cannot adequately reproduce their own motion course in a

self-adaptive way. With each repetition of the same motion along the

same route, all regulating parameters must be calculated by means of

picture analysis anew. If environment conditions change through fog,

darkness or snowfall, such systems are overburdened.

Pat. AT 400 028 describes a system for the adaptive regulation of a motor driven vehicle, in which certain landmarks or signal sources are provided along the vehicle's route in order to serve as bearing markers that allow the robot to keep to a schedule. Positions determined by GPS data can also serve this purpose. When the system passes these sources, the sensor-coupled on-board computer acquires the elapsed times for all covered route segments by means described in Pat. U.S. 4,245,334, which details the manner of time quantization by first and second sensor signals. The data acquired in this way serve as a

reference base for the computation of regulating parameters that

control the drive cycles and brake cycles of the vehicle when a

motion repetition happens. The system works with low data

redundancy, corrects itself in a self-adaptive manner, and is

capable of reproducing an electronic route schedule precisely. It is

suitable, for example, for ensuring railway networks keep to

schedule. However, in the system detailed in the above-mentioned

patent, it is not possible to identify external objects and

surroundings.

It is an object of the present invention to provide an extensive method for the creation of autonomous self-organizing robot systems or organisms, which enables them to identify external signals, objects, events, physical conditions or surroundings in real time by observing from their own subjective view. They will be able to recognize their own motion patterns and to reproduce and optimize their behavior in a self-adaptive way. Another object of this invention is the preparation of an autonomous training robot for use in sports that is capable of identifying, reproducing and optimizing a motion process (e.g. one that has been trialed beforehand by an athlete) as well as: determining the ideal track and speed courses automatically; keeping to route schedules; representing its own motion, speeds, lap times, intermediate times and start to finish times on a monitor; and which is capable of acoustic or optical output of the acquired data.

Summary Of The Invention

The requirements outlined in the previous paragraph are solved generically by attaching analog sensors or receptors onto the moving system (for example, a robot system) which scan surrounding signal sources whose amplitudes are subdivided by defining a number of threshold values. This creates perception zones. The elapsed times of all phase transitions in all zones are measured by means of analog or digital STQ quantization, and the frequency of the time pulses is modulated automatically, depending on the relative instantaneous speed which is determined by the phase displacement of equivalent sensors. Therefore the counted time pulses correlate approximately with the length-values d(nnn). With this method, the scanning of signal amplitudes is not a deterministic process: it is not carried out at predetermined times with predetermined time pulses. The recording, processing and analysis of the elapsed times take place according to probabilistic principles. As a result, a physically significant phenomenon arises: the parameters describing the external surroundings are not objectively measured by the system, but are subjectively sensed as temporal sequences. The system itself functions as observer of the process. In the technical literature - in the context of deterministic timing - elapse times are also called "signal running times" or "time intervals". According to the present invention, the so-called STQ elapse times in a signal-recognition process are quantized with every transition of a phase amplitude through a threshold value (which is effected by starting and stopping a number of timers). This produces a stream of time data. Every time elapsed between phase transitions in the "equal zone", as well as the time elapsed between transitions through a low threshold value then a higher threshold value (and vice versa), can be recorded.

STQ(v) = sensitivity/time quantum of velocity = Tv1,2,3...

This is the elapsed time determined by the signal amplitude that occurs when a first sensor (or receptor) S2 and an equivalent second sensor (or receptor) S1 move along a corresponding external signal source Q, measured from the rising signal edge at the phase transition iTv1.1 of the first sensor signal to the rising signal edge at the phase transition iTw1.1 of the second sensor signal; and likewise from iTv2.1 to iTw2.1, from iTv3.1 to iTw3.1. (These transitions correspond to equivalent threshold values P1,2,3...) STQ(v) times can also be measured from falling signal edges. They serve as parameters for the immediate relative velocity (vm) of the system in motion.

STQ(d) = sensitivity/time quantum of differentiation = Td1,2,3...

This is the elapsed time determined by the signal amplitude of a

sensor (or receptor) S within range of a corresponding external

signal source (Q1,2,3..), measured from the rising signal edge at

the phase transition iTw1 of a rising amplitude trace to the rising

signal edge at the next higher phase transition iTw2, and from the

rising edge at iTw2 to the rising edge at iTw3, from the rising edge

at iTw3 to the rising edge at iTw4, and so on; or, equivalently,

from successive falling edges when amplitude traces are falling.

(These transitions correspond to the equivalent threshold values

P1,2,3,4..) STQ(d) elapse times are differentiation parameters for

the slope of signal amplitudes (and consequently for their

frequency); furthermore they serve as a plausibility check and

verification of other corresponding STQ data. With this measurement,

the relative motion between sensor and signal source is not taken

into account. In the case of no relative motion between sensors and

sources, changes in the source field are detectable and recognizable

by recording STQ(i) and/or STQ(d) data. If the source field is

invariant, recognition is only possible if STQ(i) or STQ(v) data

are derived from variable threshold values (focusing). If there is

absolute physical invariance, no STQ-quantum can be acquired, and

recognition is impossible. STQ(v)-data are recorded in order to

recognize the spatial surroundings under relative motion, and/or to

identify relative motion processes so as to be able to recognize

the self-motion (or components of this motion); as well as to

reproduce any motion in a self-adaptive manner.

If the method

presently being described is applied in a mechanistic area, the

above-mentioned perception area zones may normally be set by a

number of electronic threshold value detectors with pre-definable

threshold levels, and the STQ(i) and STQ(d) elapse time data are

acquired by programmable digital timers. The elapse timing process

is actuated at an iT phase transition as well as halted at an iT

phase transition. Then the time data are stored in memory.

Moreover, these STQ(v) elapse times are recorded by means of

electronic integrators, in which the charge times of the capacitors

determine those potentials that are applied as analog STQ(v) data to

voltage/frequency converters, in order to modulate the digital time

pulse frequencies for the adaptive measurement of STQ(i) and STQ(d)

data, in a manner which is a function of the relative speed vm.
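The statement that speed-modulated time pulses "correlate approximately with the length-values" can be checked with simple arithmetic. The following is a minimal sketch under stated assumptions, not the circuit described above: the sensor spacing, the constant `pulses_per_metre`, the function names, and the assumption of uniform speed within a segment are all hypothetical and chosen only for illustration.

```python
# Sketch: a pulse frequency proportional to the relative speed makes the
# pulse count of a route segment depend on its length, not on the speed.
# All names and constants here are illustrative assumptions.

def pulse_frequency(tv, sensor_spacing, pulses_per_metre):
    """Pulse frequency derived from an STQ(v) elapse time tv (seconds).

    The relative speed is estimated as sensor spacing divided by tv,
    and the frequency is made proportional to that speed.
    """
    speed = sensor_spacing / tv
    return pulses_per_metre * speed


def counted_pulses(segment_time, tv, sensor_spacing=0.05, pulses_per_metre=100.0):
    """Number of modulated pulses counted while a segment is traversed."""
    return pulse_frequency(tv, sensor_spacing, pulses_per_metre) * segment_time


# A 2 m segment traversed slowly (0.5 m/s) and quickly (4 m/s).
slow = counted_pulses(segment_time=2.0 / 0.5, tv=0.05 / 0.5)
fast = counted_pulses(segment_time=2.0 / 4.0, tv=0.05 / 4.0)
```

Both runs yield about 200 pulses for the same 2 m segment, because the longer traversal time at low speed is compensated by the lower pulse frequency.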

In non-mechanistic implementations of the method presently being

described, it is intended that the so-called perception area zones,

as well as the threshold value detectors and the previously

described STQ-quantization processes, are not formed in the same

manner as in electronic analog/digital circuits, but in a manner

akin to molecular/biological structures. In other general

implementations, it is intended that those time stream patterns that

consist of currently recorded STQ data be continuously compared with

prior recorded time stream patterns by means of real time analysis,

in order to identify external events or changes in physical

surroundings with a minimum of redundancy, as well as to recognize

these in real time.
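The comparison of current and prior time stream patterns can be sketched in a few lines. The text does not specify how the comparison is computed; the example below assumes a normalized (Pearson) correlation as a stand-in measure of covariance, and the function names and sample data are hypothetical.

```python
from math import sqrt

# Sketch: find where a currently recorded elapse-time sequence best matches
# a prior recorded one. Pearson correlation is an assumed stand-in measure.

def correlation(a, b):
    """Pearson correlation of two equally long elapse-time sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant sequence carries no pattern to compare
    return cov / sqrt(var_a * var_b)


def best_match(current, recorded):
    """Slide `current` over `recorded`; return (offset, correlation) of the best window."""
    best = (0, -1.0)
    for offset in range(len(recorded) - len(current) + 1):
        r = correlation(current, recorded[offset:offset + len(current)])
        if r > best[1]:
            best = (offset, r)
    return best


recorded = [5, 3, 8, 2, 7, 4, 9, 1]           # prior recorded elapse times
current = [1.5 * x for x in recorded[2:6]]    # same pattern, traversed more slowly
offset, r = best_match(current, recorded)
```

Because the correlation is insensitive to a uniform scaling of the elapse times, the slower repetition is still matched at offset 2 with a correlation close to 1.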

In yet another possible general

implementation, it is intended that autonomously moving systems,

that are equipped with sensors and facilities capable of the kind of

time stream pattern recognition mentioned above, have propulsion,

steering and brake mechanisms that are regulated in such a manner,

that the autonomously moving system (in particular, a mobile robot

system) is capable of reproducing prior recorded STQ time stream

patterns in a self-adaptive way. When repeating this movement, a

processor deletes unstable or insufficiently co-ordinated time

stream data from memory, while assigning only those time stream data

as instruction, which allows reproduction of the motion along the

same routes in an optimal co-ordinated manner.

In addition, it is

intended that the time base frequency for the above mentioned STQ

elapse timing is increased or decreased in order to scale the time

sequences proportionally, whereby the velocity of all movements is

proportionally scaled too.

Short Description Of The Figures

Fig. 1 shows a diagram of SHANNON's

deterministic method of discrete time quantization of signal

amplitude traces.

Figs. 2a-c are graphic diagrams of the quantization of signal amplitude traces by means of acquisition of STQ(v), STQ(i) and STQ(d) elapse times, according to the herein described non-deterministic method.

Figs. 3a-c illustrate this non-deterministic quantization method in connection with serial transfer of acquired STQ(d) elapse times, as well as time pulse frequency modulation of simultaneously acquired parameters of the immediate relative speed (vm).

Figs. 3d-g illustrate, in accordance with the

presently described invention, a method to compare the currently

acquired STQ time data sequences with prior recorded STQ time data

sequences, in order to detect isomorphism of certain time stream

patterns.

Fig. 4a shows an action potential AP.

Fig. 4b shows vm dependent action potentials which propagate from a sensory neuron (receptor) along a neural membrane to the synapse where the covariance of STQ sequences is analysed.

Fig. 4c shows a number of vm dependent action potentials, which propagate from a group of suitable receptors along collateral neural membranes to synapses, at which the "temporal and spatial facilitation" of APs is analysed together with the covariances of these STQ sequences in order to recognize a complex perception.

Fig. 4d shows a postsynaptic neuron that produces potentials with inhibitory effects.

Fig. 4e and Fig. 4f show the general function of the synaptic transfer of molecular/biologically recorded STQ information to other neurons or neuronal branches.

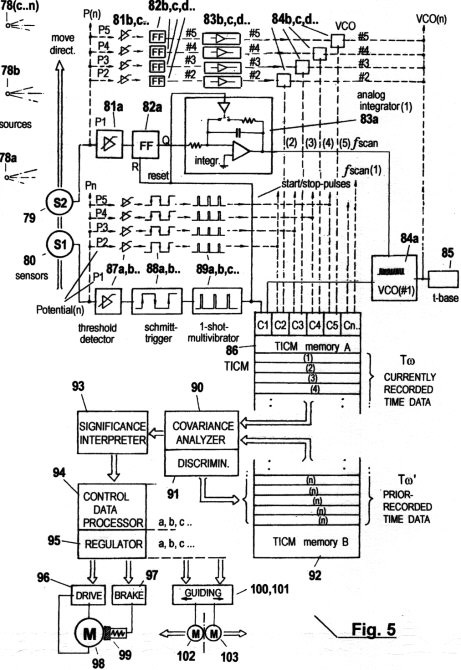

Fig. 5 shows a

configuration where the described invented method has been applied

to generate an autonomous self-organizing mechanism, and where the

STQ time data are acquired by means of electronics.

Fig. 6a shows a configuration of a concrete embodiment of

the present method, where (as in Figs. 2a - 2c) the acquired STQ(v),

STQ(i) and STQ(d) time data are applied to the recognition of

certain spatial profiles, structures or objects when the system is

in motion at arbitrary speed.

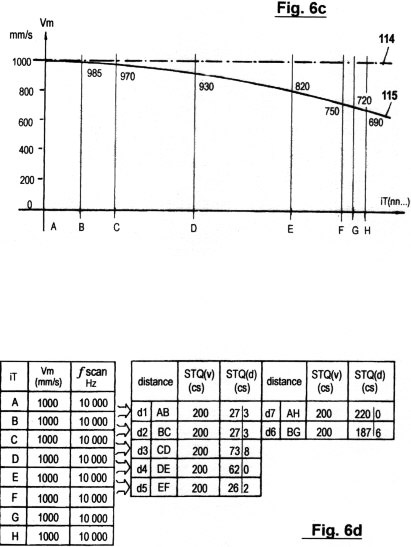

Figs. 6b-e

illustrate several diagrams and schedules in accordance with the

particular embodiment in Fig. 6a, in which the sensory scanning and

recognition of certain profiles can occur under invariable or

variable speed course conditions.

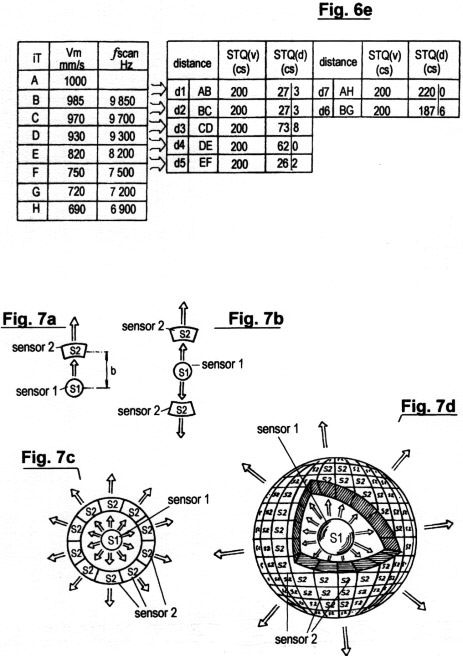

Figs. 7a-d

show several configurations of sensors and sensor structures for the

recording of STQ(v) elapse times, which serve as parameters of the

immediate relative velocity vm.

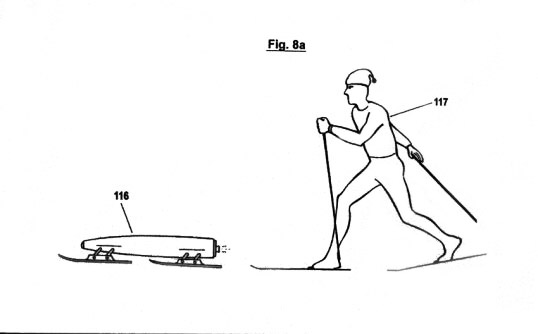

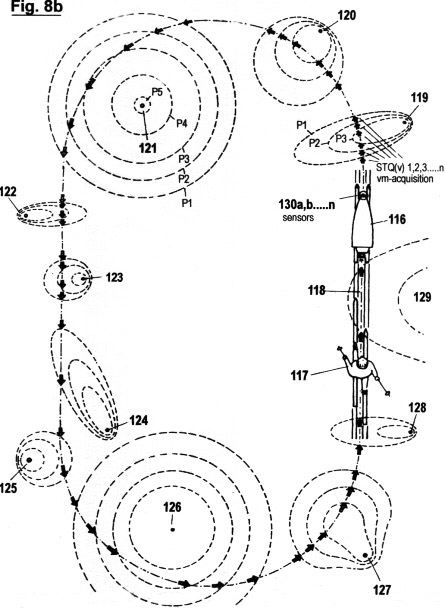

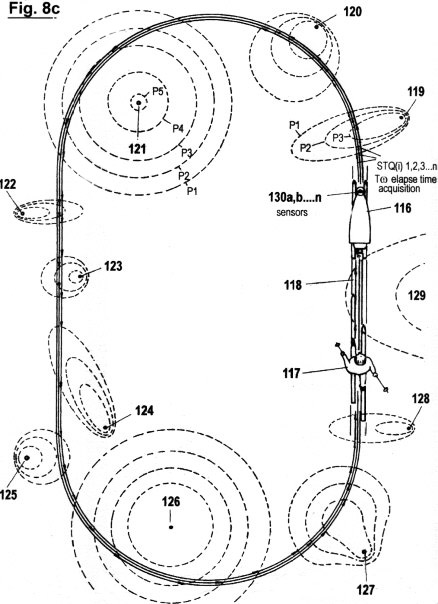

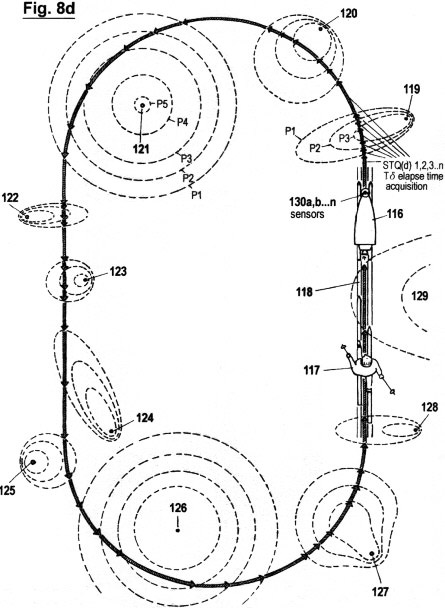

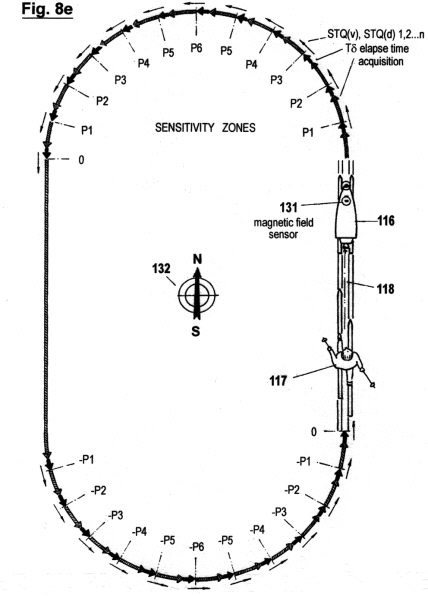

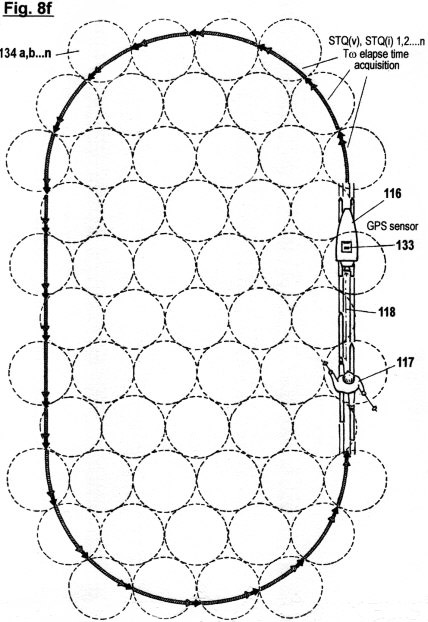

Figs. 8a-f

illustrate a configuration, as well as the principles under which

another embodiment of the invention functions, in which the

acquisition of STQ time data (see Figs. 2a - 2c) is used to create

an autonomous self-adaptive and self-organizing training robot for

use in sport. This embodiment is capable of reproducing and

optimizing motion processes that have been pre-exercised by the

user. It is also capable of determining the ideal track and speed

courses automatically; of keeping distances and times; of

recognizing and warning in advance of dangerous impending

situations; and of representing on a monitor the self-motion, in

particular the speed, lap times, intermediate times, start to finish

times and other relevant data. In addition, this embodiment is capable of displaying these acquired data in an optical or acoustic manner.

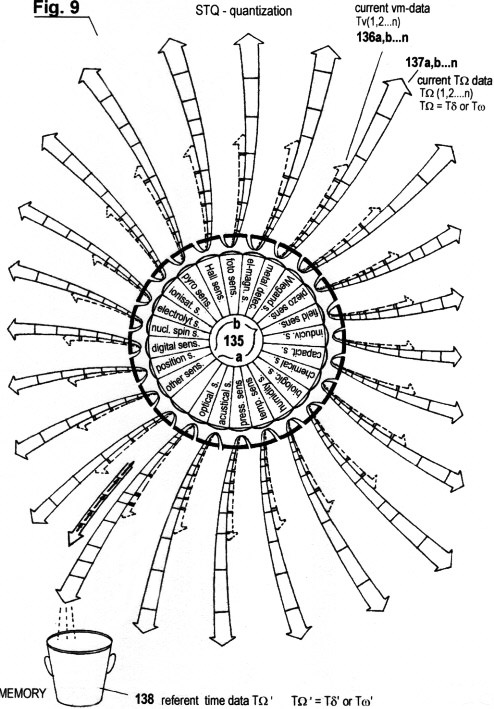

Fig. 9 shows a schematic diagram of

the automatic focusing of certain perception zones or threshold

values, through which it is intended to improve and optimize the

recognition capability and the auto-covariant behaviour of the

system in motion. (This point is the subject of an additional patent application.)

Fig. 10 illustrates in a

general schematic view the production of time data streams by

amplitude transitions at certain sensory perception areas or

sensitivity zones (or threshold values, respectively) in autonomous

self-adaptive and self-organizing structures, organisms or

mechanistic robot systems, where a multiplicity of types of sensors

or receptors can exist.

Detailed Description Of The Invention

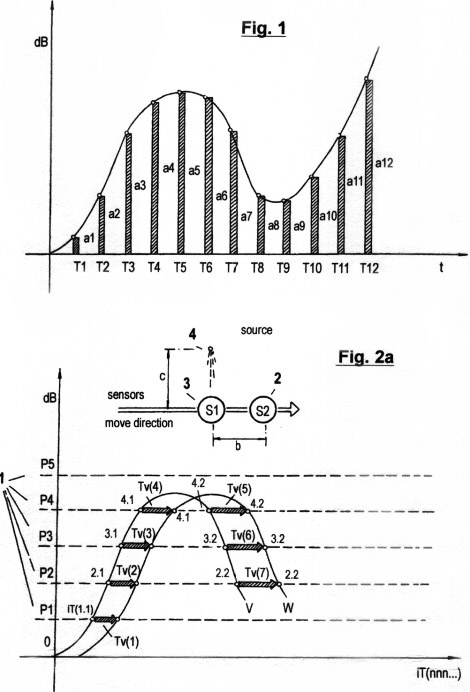

Fig. 1 shows a diagram of SHANNON's deterministic method of discrete time quantization of signal amplitude traces, which are digitized by analog/digital converters. In the usual technical language this method is called "sampling". This deterministic quantization method is characterized by quantized data (a1,a2,a3 ...an) which correlate to certain points in time (T1,T2,T3, ...Tn) that are predetermined from the program counter of a processor.

In present day robotics practice, this currently used deterministic method requires very fast processors, high sampling rates and highly redundant calculations for the processing and evaluation of data. If one wants to acquire sensor data from signal amplitudes of external sources for the purpose of getting information about the spatial surroundings of a system in which a sensor coupled processor is installed, SHANNON's method is incapable of generating suitable data for the immediate relative speed and temporal allocation, data which are necessary to optimize the coordination of the relative self-motion. A recognition of its own motion in real time therefore is not possible. For this reason, this currently used deterministic method is inadequate for the generation of highly effective autonomous robot systems.
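The difference in data volume between fixed-rate sampling and threshold-transition events can be illustrated with a small simulation. This is only a sketch, not a benchmark: the test signal, the sampling rate and the threshold values are arbitrary assumptions, and the transitions are detected from samples here only because a software simulation has no analog threshold detectors.

```python
import math

# Sketch: number of amplitude samples versus number of threshold transitions
# for the same slowly varying signal. All values are illustrative assumptions.

def uniform_samples(signal, duration, rate_hz):
    """Fixed-rate sampling: one (time, amplitude) pair per clock tick."""
    n = int(duration * rate_hz)
    return [(k / rate_hz, signal(k / rate_hz)) for k in range(n)]


def count_crossings(samples, thresholds):
    """Count rising and falling transitions through each threshold value."""
    count = 0
    for (_, x0), (_, x1) in zip(samples, samples[1:]):
        for p in thresholds:
            if (x0 < p <= x1) or (x1 < p <= x0):
                count += 1
    return count


def signal(t):
    return math.sin(math.pi * t)  # 0.5 Hz test signal


samples = uniform_samples(signal, duration=4.0, rate_hz=1000)
events = count_crossings(samples, thresholds=[-0.5, 0.25, 0.75])
```

Over two signal periods the sampler stores 4000 amplitude values, while the three thresholds are crossed only 12 times in total.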

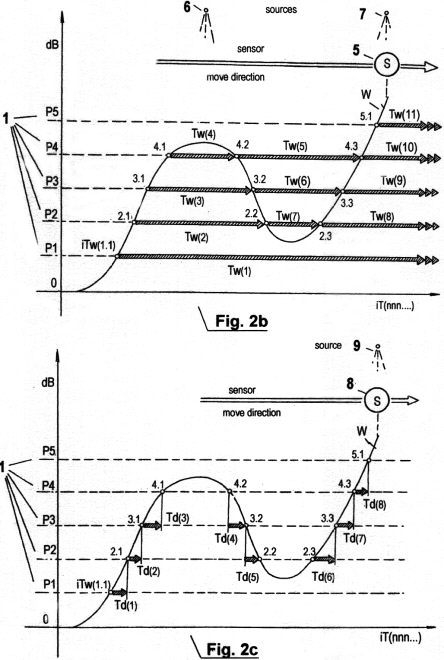

Figs. 2a - c show three different graphs of direct "sensory quantization" of signal amplitude traces by means of the herein described invented method. In contrast to the quantization method shown in Fig. 1, in this method no vertical segments of amplitude traces are scanned; there are only elapse time measurements carried out in three different complementary ways. As is easily seen, it is necessary to predefine a certain number of threshold values 1 (P1, P2, ...Pn) in order to provide different sensory perception zones. Each residence time within a zone and each time interval between zones is recorded, as well as the elapse time between the transition from a lower to a higher threshold value and vice versa.

Fig. 2a shows the first of these three types of sensory time quantization. It is designated STQ(v) elapse time (i.e. sensitivity/time quantum of velocity), and produces a parameter for the relative instantaneous speed vm. It can also be understood as the time duration between the phase transitions of two parallel signal traces at the same threshold value potential. That is similar to the standard term "phase shift". In the graph, the measured STQ(v) elapse times are designated with Tv(n). The phase transitions at the amplitude trace V, which is produced when the sensor (or receptor) 2 passes along a corresponding external signal source 4, are designated iTv(n.n); the phase transitions at the amplitude trace W, which is produced when the sensor (or receptor) 3 passes along the same signal source, are designated iTw(n.n).

In the ideal case, the sensors 2, 3 are close together compared to the distance c between external signal source and sensors, c remains approximately constant, and both sensors (or receptors) display identical properties and provide an analogue signal; then two amplitude traces V and W are produced at the outputs of the mentioned sensors (the sensor amplifiers or receptors, respectively) which are approximately congruent. (Deviations from ideal conditions are compensated by an autonomous adaptation of the sensory system in a continuously improved way, which is described later.) When sensor 2, in the designated direction, moves along the signal source 4, then the signal amplitude V passes through the predefined threshold potential P1 at phase transition iTv(1.1). The rising signal edge actuates a first timer that records the first STQ(v) elapse time Tv(1). The continually rising signal amplitude V passes through the threshold potentials P2, P3 and P4; the phase transition of each of these activates further timers used for recording of further elapse times Tv(2), Tv(3) and Tv(4).

Meanwhile, sensor 3 has approached signal source 4 and produces the signal amplitude trace W. When W passes through the threshold potential P1 at the phase transition iTw(1.1), the rising signal edge stops the timer, and the first STQ(v) elapse time is recorded and stored. The same procedure is repeated for the elapse times Tv(2), Tv(3) and Tv(4), when the signal amplitude passes through the next higher threshold values P2, P3 and P4. If V begins to fall, it first passes through the threshold value P4 on the falling shoulder of the amplitude trace. Now, the falling signal edge activates a timer that records the next elapse time Tv(5). At the further phase transitions iTv(3.2) and iTv(2.2), where the threshold values P3 and P2 are passed downwards, there are also timers which are actuated when the signal edges fall, in order to measure the elapse times Tv(6), Tv(7). If the signal amplitude V rises again, the STQ(v) parameters are recorded by the rising signal edges again. The same procedure is applied to stopping the timers at the phase transitions of the signal amplitude W. This produces the time displacement.
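The STQ(v) measurement on rising edges can be imitated in software as follows. This is a minimal sketch, assuming the two traces V and W are available as lists on a common time axis and that transition times may be linearly interpolated; the function names and the example traces are hypothetical, and the timers of the described hardware are replaced by simple subtraction.

```python
# Sketch: STQ(v) elapse times Tv(n) as the delay between the rising-edge
# transitions of two congruent traces V and W at the same threshold Pn.
# Sampled traces and linear interpolation are illustrative assumptions.

def rising_crossings(t, x, thresholds):
    """Time of the first rising transition through each threshold, by index."""
    times = {}
    for k in range(1, len(x)):
        for i, p in enumerate(thresholds):
            if i not in times and x[k - 1] < p <= x[k]:
                frac = (p - x[k - 1]) / (x[k] - x[k - 1])
                times[i] = t[k - 1] + frac * (t[k] - t[k - 1])
    return times


def stq_v(t, v_trace, w_trace, thresholds):
    """Tv(n): a timer is started by trace V and stopped by trace W at Pn."""
    start = rising_crossings(t, v_trace, thresholds)
    stop = rising_crossings(t, w_trace, thresholds)
    return [stop[i] - start[i]
            for i in range(len(thresholds)) if i in start and i in stop]


t = list(range(10))
v_trace = [0, 1, 2, 3, 4, 5, 5, 5, 5, 5]   # leading sensor
w_trace = [0, 0, 0, 1, 2, 3, 4, 5, 5, 5]   # same trace, two time units later
tv = stq_v(t, v_trace, w_trace, thresholds=[0.5, 1.5, 2.5, 3.5])
```

With congruent traces every threshold yields the same delay of two time units; dividing an assumed sensor spacing by this delay would give an estimate of the relative speed vm.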

Fig. 2b shows another type of sensory STQ

quantization. It is called STQ(i) elapse time (i.e. sensitivity/time

quantum of interarrival). Simply, it is the time Tw a mobile system

needs for a relative length. It can also be understood as the time

duration between phase transitions of a signal trace at same

threshold potentials. If the time counting frequencies corresponding

to the relative speed parameters Tv, (i.e., the STQ(v) elapse times)

are proportionally accelerated or decelerated, the recorded

modulated time pulses correlate with the relative lengths. With

absolute physical invariance between the sensor and the signal

sources (i.e., synchronism), no STQ(v) parameter can be acquired,

but if an equivalent signal intensity is changing, STQ(v) data are

even obtainable when there is no relative motion. Therefore, during

motion, these data are necessary not only for recording variable

signals, but also for scanning spatial surroundings. In this figure,

measured STQ(i) elapse times are designated with Tw(n). The phase

transitions, which are produced by the amplitude trace W when the

sensor (or receptor) 5 is moving along the

corresponding adjacent signal sources 6 and

7, are designated with iTw(n.n). As soon as the sensor (or

receptor) 5 passes in the marked direction along the signal source

6, the signal amplitude W goes through the

pre-defined threshold potential P1 at phase transition iTw(1.1). The

rising signal edge activates a first timer for the recording of the

first STQ(i) elapse time Tw(1). Thereafter, the continually rising

signal amplitude W passes through the pre-defined threshold

potentials P2, P3 and P4, and when these show a phase transition,

further timers are activated in order to record further elapse times

Tw(2), Tw(3) and Tw(4). Meanwhile, sensor 5 begins

to move away from the vicinity of the signal source 6. The falling

amplitude trace passes through the threshold potential P4, and upon

the phase transition iTw(4.2) the falling signal edge stops the

timer that was recording the STQ(i) elapse time Tw(4).

Simultaneously, the same falling signal edge activates another timer

which measures the elapsed time Tw(5) up to the arrival of the next

rising signal edge. But this signal edge rises when sensor 5

passes along the equivalent signal source 7.

However, previously, the signal amplitude falls under the threshold

values P3 and P2, and when these show the phase transitions iTw(3.2)

and iTw(2.2), the timers recording the STQ(i) elapse times Tw(3) and

Tw(2) are stopped. Simultaneously, additional timers recording the elapse times Tw(6) and Tw(7) are activated. They stop again at the phase transitions iTw(2.3), iTw(3.3), iTw(4.3) and iTw(5.1), when the signal amplitude goes upwards again (but not before the sensor motion along signal source 7 starts). After those phase transitions, new timers start recording the next elapse times Tw(8), Tw(9), Tw(10), Tw(11), and so on.
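The STQ(i) procedure, in which each transition through a threshold stops one timer and starts the next, can be approximated as the list of intervals between successive transitions at the same threshold. The sketch below assumes a sampled trace with linearly interpolated transition times; the function names and the example trace (one sensor passing two equivalent sources) are hypothetical.

```python
# Sketch: STQ(i) elapse times as intervals between successive transitions
# of one trace at the same threshold value. Sampled data and linear
# interpolation are illustrative assumptions.

def crossing_times(t, x, p):
    """Times of all rising and falling transitions through threshold p."""
    out = []
    for k in range(1, len(x)):
        x0, x1 = x[k - 1], x[k]
        if (x0 < p <= x1) or (x1 < p <= x0):
            frac = (p - x0) / (x1 - x0)
            out.append(t[k - 1] + frac * (t[k] - t[k - 1]))
    return out


def stq_i(t, x, thresholds):
    """Per threshold: elapse times between consecutive transitions."""
    result = {}
    for p in thresholds:
        c = crossing_times(t, x, p)
        result[p] = [b - a for a, b in zip(c, c[1:])]
    return result


t = list(range(13))
trace = [0, 1, 2, 3, 2, 1, 0, 1, 2, 3, 2, 1, 0]   # sensor passes two sources
tw = stq_i(t, trace, thresholds=[0.5, 1.5, 2.5])
```

For the lowest threshold the result is `[5.0, 1.0, 5.0]`: a residence time above the threshold, the gap until the second source is reached, and the second residence time.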

Fig. 2c shows a third type of sensory STQ quantization that is completely different to those of Figs. 2a and 2b. It is termed STQ(d) elapse time (i.e., sensitivity/time quantum of differentiation); and it can be understood as the time duration Td, measured from a first phase transition at a first predefined threshold potential up to the next phase transition at the next threshold potential, which can be either higher or lower than the

first one. STQ(d) elapse times are parameters for the slope of

signal amplitude traces, and consequently they are parameters for

their frequency. By fast comparison of STQ(d) elapse times, signal

courses can be recognized in real time; therefore, for the creation

of intelligent behavior, STQ(d) quanta are just as imperative as

STQ(v) quanta and STQ(i) quanta. The quantization of STQ(d)-elapse

times is possible under all variable physical states and arbitrary

relative motion between sensor and external sources, in which STQ(v)

and STQ(i) elapse times are also quantizable. If the STQ(d) elapse

times are acquired cumulatively and serially, then they can be used

in the verification and plausibility examination of STQ(i) elapse

times (which are likewise acquired). In the graph, the measured

STQ(d) elapse times are designated with Td(n). The phase transitions

which are produced by the amplitude trace W when the sensor (or

receptor) 8 is in the field of a corresponding signal source 9, are

designated with iTw(n.n). When sensor 8 moves along the

corresponding signal-source 9 in the direction shown, at first the

signal amplitude W passes through the pre-defined threshold value P1

at the phase-transition iTw(1.1). Of course, this also happens when

the field of this signal source is active and/or variable, although

the sensor and the corresponding signal source are in an invariant

opposite position. The rising signal edge activates a first timer

that records the first STQ(d) elapse time Td(1). When the rising

amplitude trace W passes through the next higher threshold value P2

at the phase transition iTw(2.1), this timer is stopped and the

measured STQ(d) elapse time Td(1) is stored. Simultaneously, the

next timer is activated, and records the elapse time up to the next

phase transition at iTw(3.1), upon which it is stopped; then the

next timer is activated up to the next transition iTw(4.1), upon

which it is stopped again, and so on. (All the measured elapse times

are stored in memory). At the phase transition iTw(4.1) the next

timer is activated by threshold potential P4. However, since the

amplitude trace does not reach the next higher threshold value

before falling to P4 again, no STQ(d) can be acquired with the last

timer. Thus in this position only the quantization of STQ(i) elapse

times, as described in Fig. 2b, can take place. The next STQ(d)

elapse time Td(4) can only be acquired when the signal amplitude

falls below the threshold value P4 at the transition iTw(4.2), upon

which the next timer is activated, and stopped when the phase

transition at the next lower threshold value P3 occurs.

Simultaneously, the next timer is activated, and so on.
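The STQ(d) timing described for Fig. 2c can be sketched in software as follows. This is only a minimal illustration, assuming a uniformly sampled amplitude trace; the function name `stq_d_elapse_times`, the sample values and the threshold values are hypothetical and do not come from the figure. A timer that is started and stopped at the same threshold (as at P4 in the text) yields no STQ(d) value and is skipped.

```python
from typing import List, Sequence, Tuple


def stq_d_elapse_times(
    samples: Sequence[float],
    dt: float,
    thresholds: Sequence[float],
) -> List[Tuple[int, int, float]]:
    """Return (from_level, to_level, Td) for successive threshold crossings.

    Levels are 1-based indices into `thresholds` (P1, P2, ...).
    """
    events = []  # (time, level) of every phase transition, in order of occurrence
    for k in range(1, len(samples)):
        prev, cur = samples[k - 1], samples[k]
        levels = range(1, len(thresholds) + 1)
        # Visit thresholds in the order in which the trace meets them.
        ordered = levels if cur > prev else reversed(levels)
        for level in ordered:
            p = thresholds[level - 1]
            if (prev < p <= cur) or (prev >= p > cur):
                events.append((k * dt, level))

    elapse = []
    for (t0, l0), (t1, l1) in zip(events, events[1:]):
        if l0 != l1:  # same threshold twice: no STQ(d) for this timer
            elapse.append((l0, l1, t1 - t0))
    return elapse


if __name__ == "__main__":
    # Hypothetical trace W that rises through P1..P4 and falls back again.
    w = [0.0, 0.5, 1.5, 1.8, 2.5, 3.2, 4.5, 4.2, 3.5, 2.5, 2.2, 1.5, 0.5]
    for from_level, to_level, td in stq_d_elapse_times(w, 1.0, [1.0, 2.0, 3.0, 4.0]):
        print(f"P{from_level} -> P{to_level}: Td = {td}")
```

A short Td between two thresholds indicates a steep slope of the trace, which is the sense in which STQ(d) elapse times are said above to be parameters for the slope.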

In

mechanistic applications, where the analysis of signal amplitudes

requires the quantization of STQ(d) elapse times, STQ(d) data are

often acquired in combination with STQ(i) data. If it is intended to

use this quantization method to enable a robot to recognize its own

motion from a subjective view (by detecting and scanning the spatial

surroundings when one moves along external signal sources), then

STQ(v) and STQ(i) data are predominantly acquired. However, if the

main intention is to recognize external, non-static optical or

acoustic sources such as objects, pictures, music or conversations

etc., then the proportion of STQ(d) parameters increases, while the

proportion of STQ(v) parameters decreases. In the case of physical

invariance (i.e. when there is no relative motion) no speed

parameters can be derived from any sensor signals, and only STQ(d)

and STQ(i) elapse times are quantized.

Figs. 3 a - c

illustrate an important aspect of the performance of the present

method, in connection with serial transfer of acquired STQ(d) elapse

times, as well as in connection with time pulse frequency modulation

in relation to simultaneously acquired STQ(v) parameters which

represent the instantaneous relative speed (vm). However, this

instantiation of the method is only suitable where mainly STQ(d)

elapse times are measured, together with those STQ(i) elapse times

(see also Fig. 2c) which are produced at the phase transitions when

the maximal threshold value near the maximum of the amplitude is

reached, or when the minimal threshold value near the minimum of the

amplitude is reached. In this case, all measured elapse times can be

represented as serial data sequences. But if each phase transition

at each threshold potential generates STQ(d) elapse times as well as

STQ(i) elapse times (see also the notes for Fig. 5), then these data

are produced in parallel, and therefore they have to be processed in

parallel.

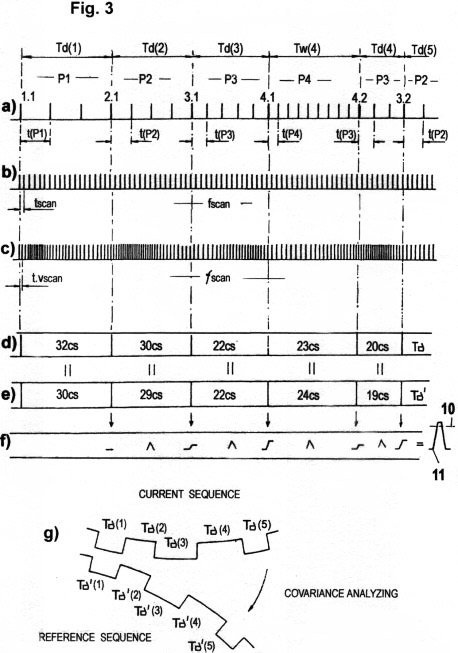

Fig. 3a shows how a simple serial pulse sequence can be sufficient for data transport of acquired STQ(d) elapse times, if the threshold potentials P1, P2, P3... that define the phase transitions 1.1, 2.1, 3.1... from which the STQ elapse times are derived, are "marked" either by codes or by certain characteristic frequencies. In this figure, these "markers" are pulses with periods t(P1), t(P2), t(P3)... and frequencies f(P1), f(P2), f(P3)..., which are modulated according to the respective threshold potentials. These identification pulses (IP) serve to identify the pre-defined threshold values P1, P2, P3... (or the perception zones 1, 2, 3..., respectively). Only these identification pulses, in cooperation with invariable time counting pulses (ITCP) with the period tscan, or in cooperation with variable (vm-modulated) time counting pulses (VTCP) with the period t.vscan (see also Figs. 3b, 3c), enable the actual acquisition of the STQ(d) elapse times Td(1), Td(2), Td(3), Td(4)... (or, respectively, of the STQ(i) elapse times Tw(1), Tw(2), Tw(3), Tw(4)... that are produced at amplitude maxima or minima), as already described. Variable VTCP pulses with the period t.vscan, which are automatically modulated relative to the acquired STQ(v) parameters (i.e., the instantaneous relative speed vm), are used to scan the signal amplitudes that are derived from external sources, in a manner proportional to speed. This reduces the redundancy of the calculation processes considerably (see also Fig. 3c). The STQ(d) elapse times that are acquired in such a vm-adapted manner by VTCP pulses are designated Tδ; the STQ(i) elapse times, acquired in the same manner, are designated Tω(1,2,3...).
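The role of the identification pulses can be illustrated with a small sketch. It assumes that each threshold P1...P4 is marked by a distinct pulse period and that a receiver recovers the perception zone by choosing the nearest known period. The numeric periods and the names `IP_PERIODS_MS`, `zone_from_period` and `tag_elapse_times` are hypothetical; the source gives no numeric values.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical marker periods t(P1)..t(P4) in milliseconds (assumed values).
IP_PERIODS_MS: Dict[int, float] = {1: 8.0, 2: 4.0, 3: 2.0, 4: 1.0}


def zone_from_period(period_ms: float, table: Dict[int, float] = IP_PERIODS_MS) -> int:
    """Return the perception zone whose marker period is closest to the observed one."""
    return min(table, key=lambda zone: abs(table[zone] - period_ms))


def tag_elapse_times(
    ip_periods_ms: Sequence[float],
    elapse_counts: Sequence[int],
) -> List[Tuple[int, int]]:
    """Pair each serially transmitted elapse-time count with the zone its marker identifies."""
    return [(zone_from_period(p), c) for p, c in zip(ip_periods_ms, elapse_counts)]


if __name__ == "__main__":
    # Slightly jittered marker periods followed by their elapse-time counts.
    print(tag_elapse_times([8.1, 3.9, 2.0], [32, 30, 22]))
```

With such markers a single serial channel can carry the elapse times, because every count arrives together with the identity of the threshold it belongs to.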

Fig. 3b shows the

measurement of STQ(d) elapse times with invariant ITCP pulses with

period tscan and constant frequency fscan. This takes place as long

as no STQ(v) parameter is acquired, e.g. when no relative motion is

present between sensor and signal sources, and therefore when no vm can be

measured.

Fig. 3c shows the

measurement of STQ elapse times with modulated VTCP pulses. The

period t.vscan and frequency ƒscan of these counting pulses depend on

the instantaneous relative speed vm (or on the acquired STQ(v)

parameter, respectively), in a manner that is proportional to vm. If

vm is very small or tends to zero, then the counting frequency ƒscan

is likewise reduced to the minimum frequency fscan (as seen in Fig.

3b). As shown in Fig. 2a, each STQ(v) parameter is acquired by means

of a second adequate "front" sensor (or receptor). Vm is thus

already recorded even before the actual STQ(d) and/or STQ(i) elapse

time measurement. Therefore it is possible automatically to modulate

ƒscan for the measurement of Tδ data according to the acquired

STQ(v) parameters, in order to reduce the number of calculations

as well as to minimize memory requirements. Thus, a largely

redundancy-free analysis results.

Although the time impulses

counted with this method are approximately covariant with the

covered lengths (d), it can be proved that they nevertheless

represent modified time data, and not distance data. As with the

origin of those data, the further processing and analysis of such

modified STQ elapse times Tδ (n) is dependent on probabilistic

principles. The time data T δ (n) are effectively "subjectively

sensed".
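The effect of vm-proportional counting can be made concrete with a short sketch. It assumes a linear relation between vm and the counting frequency, with the invariant ITCP rate as a lower bound; the gain, the minimum frequency and the function names are hypothetical choices for illustration only.

```python
def scan_frequency(vm: float, f_min: float, gain: float) -> float:
    """Counting frequency proportional to vm, never below the invariant ITCP rate f_min."""
    return max(f_min, gain * vm)


def count_pulses(duration: float, vm: float, f_min: float = 10.0, gain: float = 100.0) -> int:
    """Number of counting pulses accumulated over `duration` seconds at constant speed vm."""
    return round(duration * scan_frequency(vm, f_min, gain))


if __name__ == "__main__":
    distance = 0.5  # assumed length of the scanned segment, in metres
    for vm in (1.0, 2.0, 4.0):
        print(vm, count_pulses(distance / vm, vm))
    # Without relative motion the counter falls back to the ITCP rate.
    print(0.0, count_pulses(1.0, 0.0))
```

Under these assumptions the same segment yields the same count at different speeds, which is why the counts are approximately covariant with the covered length even though they remain, by construction, counts of time pulses.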

In mechanistic systems the modulation of time counting

frequencies in a manner proportional to distance traveled is done

chiefly by means of programmable oscillators and timers, as

illustrated in Fig. 5. However, in complex structured

biological/chemical organisms, this self-adaptive process (a part of

the so-called "autonomous adaptation") is generated mainly by

proportional alteration of the propagation speed of timing pulses

in neural fibers, as shown in Figs. 4a - d. However, autonomous

adaptation and self-adaptive time base-altering processes of the

type described can also be formed differently. They can exist on

molecular, atomic or subatomic length scales. The author names this

principle "temporal auto-adaptation".

Figs. 3d - g show the

conceptual basis for the comparison of currently acquired STQ time

data sequences with prior recorded STQ time data sequences, as

well as their statistics-based analysis. The vm-modulated time

data Tδ (n), shown in Fig. 3d having the sequence 32 30 22 23 20 (cs

= cycles), are compared datum by datum with prior recorded time

data Tδ' (n), having the sequence 30 29 22 24 19, which were

likewise recorded in a vm-modulated manner. The comparison process

is actually a covariance analysis. When the regression curves of

both time data patterns converge, covariance exists. For these

purposes, in mechanistic systems, coincidence measurement devices,

comparator circuits, software for statistical analysis methods

or "fuzzy logic" can be used. The probability density

parameters are added up, and as soon as the total value within a

certain period exceeds a pre-defined threshold 10, then a signal

11 is produced that indicates that the sequence was "recognized".

This signal predominantly serves to regulate adaptively the

actuators in mechanistic systems (or motor behavior in organisms,

respectively). Moreover, the signal shows that "autonomous

adaptation" has taken place prior to these time data patterns being

recorded. In respect of the motoric behavior of any mechanistic or

biological organism, it is true that recognition of signal

sequences goes hand in hand with automatic adaptation (or

"autonomous adaptation", respectively). This principle is hereby

termed "motoric auto-adaptation" or "auto-emulation".
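The comparison of the two sequences from Fig. 3d can be sketched as follows. The text does not specify the statistical measure, so the Pearson correlation coefficient is used here purely as a stand-in for the covariance analysis, and the recognition threshold of 0.9 is an assumed value (it is unrelated to the reference numeral 10 in the figure).

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def recognized(current: Sequence[float], reference: Sequence[float],
               threshold: float = 0.9) -> bool:
    """Emit the 'recognized' signal when the correlation reaches the assumed threshold."""
    return pearson(current, reference) >= threshold


if __name__ == "__main__":
    current = [32, 30, 22, 23, 20]    # T-delta(n) from Fig. 3d, in cycles
    reference = [30, 29, 22, 24, 19]  # prior recorded T-delta'(n)
    print(round(pearson(current, reference), 3), recognized(current, reference))
```

For the two sequences quoted in the text the coefficient is close to 1, so a threshold of this kind would report the sequence as recognized; a reversed copy of the current sequence correlates negatively with the reference and would not.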

Fig. 3g shows this auto-adaptation process in a

schematic and easily comprehensible manner. A currently acquired

Tδ time data sequence is continually compared with prior recorded

Tδ' time data sequences, and if approximate covariance appears, then

the sequences fit like a key into a lock. As described in the

following sections, this process produces a type of "bootstrapping"

or "motoric emulation", which constitutes a basic characteristic

of redundancy-free autonomous self-organizing systems and

organisms. Admittedly, the covariance analysis of two time data

patterns in mechanistic/electronic systems is relatively

complicated (see also Fig. 5). But this is not so in

molecular/biological organisms and other systems. In such systems,

this "bootstrapping" appears as a so-called "synergetic effect",

which is approximately comparable with rolling a number of

billiard balls into holes arranged in some pattern. (The name

"synergetic" was first used by H. HAKEN in the year 1970.)

Successful potting is determined by speed and direction. If the

speed and direction are altered, no potting will take place. An

attempt can also fail if the positions of the holes were somehow

changed whilst the initial positions of the balls were kept

constant, even if their speed and direction were covariant with the

original speed and direction (and when the covariance does not

adequately take into account the changing pattern). In a similar

way, a current STQ time data sequence, acquired by an autonomous

self-organizing system, produces a characteristic fingerprint

pattern, and whenever a previously recorded reference pattern is

detected that is isomorphic to the currently recorded pattern, then

auto-adaptation and auto-emulation results. This phenomenon is

inherent in all life forms, organisms and elementary structures

as a teleological principle. If no covariant reference pattern is

found, the auto-adaptive regulation collapses and the system

behaves chaotically. This motion changes from chaotic back to

ordered as soon as currently recorded STQ time patterns begin to

converge to prior recorded STQ time patterns that the analyzer

finds to be covariant.

Figs. 4a - d

illustrate a model for the acquisition and processing of STQ(d) and

STQ(v) elapse times (see also Figs. 3a-g) and for temporal and

motoric auto-adaptation in a molecular/biological context. The

basic elements of the model have already been described in the

neurophysiology literature by KATZ, GRAY, KELLY, REDMAN, J. ECCLES

and others. The present invention is of special originality

because temporal and motoric auto-adaptation is effected here by

means of STQ quanta, which are described for the first time here.

Such systems consist mainly of numerous neurons (nerve cells).

The neurons are interconnected with receptors (sensory neurons)

which enable the recording and recognition of the neurons' physical

surroundings. In addition, the neurons cooperate with effectors

(e.g. muscles) which serve as command executors for the motoric

activity. The expression "receptor" or "sensory neuron" corresponds

to the mechanistic term "sensor". An "effector" is the same as an

"actuator", which is a known term in the cybernetics literature.

Each neuron consists of a cell membrane that encloses the cell

contents and the cell nucleus. Varying numbers of branches from

the neurons (axons, dendrites etc.) pass information on to

effectors or other neurons. The junction of a dendritic or axonal

ending with another cell is called a synapse. The neurons

themselves can be understood as complex biomolecular sensors and

time pulse generators; the synapses are time data analyzers which

continually compare the currently recorded elapse time sequences

with prior recorded elapse time patterns that were produced by the

sensory neurons and were propagated along nerve fibers towards the

synapses. In turn, a type of "covariance analysis" is carried out

there, and adequate probability density signals are generated that

propagate to other neighboring neural systems or to effectors.

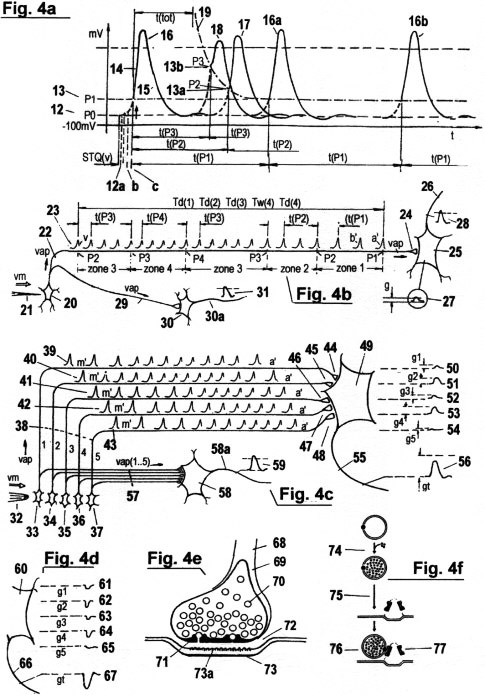

Fig. 4a shows a so-called "action potential" AP that is produced at the cell membrane by an abrupt alteration of the distribution of sodium and potassium ions in the intra- and extra-cellular solution, which works like a capacitor. These ionic concentrations keep a certain balance as long as no stimulus is produced by the receptor cell. In this equilibrium state, a constant negative potential 12, termed the "rest potential", exists at the cell membrane. As soon as a receptor perceives a stimulus from an external signal source, Na+ ions flow into the neutral cell, which causes the distribution of positive and negative ions to be suddenly inverted, and the cell membrane "depolarizes". Depending on the intensity of the receptor stimulus, several effects are produced:

(a) If the threshold P1 is not exceeded, then a

so-called "electrotonic potential" EP is produced which propagates

passively along the cell membrane (or axon fiber), and which

decreases exponentially with respect to time and distance traveled.

The production of EP is akin to igniting an empty fuse cord. The

flame will stretch itself along the fuse, becoming weaker as it goes

along, before finally going out. EP's originate with each

stimulation of a neuron.

(b) If the threshold P1 is

exceeded, then an "action potential" AP (as in Fig. 4a) is produced

which propagates actively along the cell membrane (or axon fiber)

with a constant amplitude in a self-regenerating manner. The

production of AP is akin to a spark incident at a blasting fuse: the

fiercely burning powder heats neighboring parts of the fuse, causing

the powder there to burn, and so on, thus propagating the flame

along the fuse.
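The two cases (a) and (b) can be contrasted in a short sketch. It models only the dependence on distance (the text also mentions decay over time), and the length constant, the AP amplitude and the function name `potential_at` are hypothetical values chosen for illustration.

```python
from math import exp


def potential_at(
    distance: float,
    stimulus: float,
    threshold: float,
    length_constant: float = 1.0,
    ap_amplitude: float = 100.0,
) -> float:
    """Amplitude observed at `distance` along the fiber for a given stimulus.

    Above the threshold P1 an action potential is regenerated with constant
    amplitude; otherwise the electrotonic potential decays exponentially.
    """
    if stimulus > threshold:
        return ap_amplitude  # case (b): active, self-regenerating propagation
    return stimulus * exp(-distance / length_constant)  # case (a): passive decay


if __name__ == "__main__":
    for d in (0.0, 1.0, 2.0, 3.0):
        print(d, round(potential_at(d, 5.0, 10.0), 3), potential_at(d, 12.0, 10.0))
```

The sub-threshold column shrinks with every step along the fiber, while the supra-threshold column stays at the same amplitude, mirroring the two fuse analogies above.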

AP's are used in the quantization of STQ(d) and STQ(v) elapse times. They are practically equivalent to identification pulses IP with periods t(P1), t(P2), t(Pn)..., which are shown in Fig. 3a. AP's signal the occurrence of the phase transitions from which STQ(d) and STQ(v) elapse times derive. In addition, the AP's indirectly activate the molecular/biological "timers" that are used for recording these elapse times. But AP's do not represent deterministic sampling rates for amplitude scanning; and they do not correspond to electronic voltage/frequency converters. Moreover, their amplitude is independent of the stimulation intensity at the receptor, and they do not represent the time counting pulses used in the measurement of elapse times. Rather, the recording of STQ elapse times is effected and modulated by the velocity with which the action potentials propagate along the nerve fibers (axons) and membrane regions.

The time measuring properties of AP's are

described in detail in the following section:

If an EP, in answer

to a receptor stimulus, exceeds a certain threshold value (P1)

13, then an AP is triggered. The amplitude trace of

an AP begins with the upstroke 14 and ends with the

repolarisation 15, or with the so-called

"refractory period", respectively. At the end of this process, the

membrane potential decreases again to the resting potential P0, and

the ionic distribution returns to equilibrium. Not every receptor

stimulus generates sufficient electric conductivity to produce an

AP. As long as it remains under a minimal threshold value P1, it

generates only the electrotonic potential EP (introduced above).

(For a better understanding of elapse time measurements in

biological/chemical structures, see Fig. 2c and Fig. 3a). The first

AP, which is triggered after a receptor is stimulated, generates

initially (indirectly) the impulse that activates the first timer

that records the first STQ(d) elapse time, when the signal amplitude

W passes through the threshold value of the potential P1 at phase

transition iTw(1.1). This signal represents simultaneously an

identification pulse IP. The first AP corresponds to the first IP in

a sequence of IP's that represents the respective threshold value

status or perception zone in which the stimulation amplitudes were

just found. As long as the stimulus at the receptor persists, an AP

16a, 16b... is triggered in temporal intervals

whose duration depends on the respective thresholds in which the

stimulus intensities have just been found.

These temporal

intervals correspond to those IP periods t(P1), t(P2),... that are

required for serial allocation and processing of STQ elapse times

(see Fig. 3a). The AP frequency is stabilised through the so-called

"relative refractory period" (i.e. downtime) after each AP, during

which no new depolarisation is possible. Because the relative

refractory period shortens itself adaptively in proportion to the

increase in stimulation intensity at the receptor (e.g. if the EP

reaches a higher threshold value P2 (or perception zone) 13a), there

is a similarity here with "programmable bi-stable multivibrators"

found in the usual mechanistic electronics. The downtime (refractory

period) after an AP is shown as the divided line 19.

Fig. 4a illustrates an "absolute refractory period" t(tot) following a repolarisation. No new AP can be created during this time, irrespective of how far the stimulation intensity at the receptor rises. The maximum magnitude of a recognizable receptor stimulus is programmed in this way. Of importance is the fact that both the duration of the relative refractory period and the character of the absolute refractory period are subordinate to auto-adaptive regularities, and are therefore continually adapting to newly appearing conditions in the organism. Consequently, the threshold values P0, P1, P2... from which STQ quanta are derived are themselves not absolute values, but are subject to adaptive alteration like all other parameters, including, in particular, the physical "time".

We shall now elaborate upon what happens after the first STQ(d)

elapse time at P1 is recorded via the first AP: If the stimulation

intensity (with a theoretical amplitude W) increases from the lower

threshold P1 to the next higher threshold P2, then the following AP

triggers indirectly the recording of the second STQ(d) elapse time

as soon as a phase transition occurs through the next higher

threshold P2. The same process is repeated in turn for the threshold

values P3, P4, ... and so on. In each case, the AP functions

simultaneously as an identification pulse IP, as described in Fig.

3a. It therefore recurs in threshold-dependent periods as long as a

perception acts upon the receptor (i.e. for as long as the receptor

is perceiving something).

As an example, consider also Fig.

3a: As long as the stimulation intensity remains in the zone P2, the

AP 17, 17a, 17b.... recurs in short temporal

periods. These periods (or intervals) are similar to those periods

of IP identification pulses (with period t(P2)) that are required

for serial recording of the STQ elapse times Td(2) and Tw(2). When

the increasing stimulation intensity reaches the threshold value P3

(or perception zone 3) 13b, the AP's recur in even shorter time

periods 18a, 18b, 18c... This corresponds to the IP identification

pulses with the period t(P3), shown in the figure, which are

indirectly required for serial timing of the STQ elapse times Td(3)

and Tw(3). An even larger stimulation intensity, for example in P4

(perception zone 4), would generate an even shorter period for the

AP's. This would correspond approximately to t(P4) in Fig. 3a. The

maximum possible AP pulse frequency is determined by t(tot). Shorter

refractory periods, after the depolarization of APs, also produce

smaller AP-amplitudes. This property simplifies the allocation of

AP's in addition. In the following, the generation of the actual

time counting pulses for STQ quantization is detailed. These pulses

are either invariable ITCP or vm-proportional VTCP, as illustrated

in Fig. 3a. The time counting pulses for the quantization of elapse

times are dependent on the velocity with which the AP propagate

along an axon. This velocity is in turn dependent on the "rest

potential" and on the concentration of Na+ flowing into the

intracellular space at the start of the depolarization process, as

soon as perception at the receptor cell causes an electric current

to influence the extra/intra-cellular ionic equilibrium.

With the commencement of stimulation of a receptor (at the outset of a perception), only capacitive current flows from the extra-cellular space into the intracellular fluid. This generates an "electrotonic potential" EP, which propagates passively. If this EP exceeds the threshold P1, then an AP, which propagates in a self-regenerating manner along the membrane districts, is produced. The greater the capacitive current still available after depolarisation (or "charge reversal") of the membrane capacitor, the greater the Na+ ion flow into the intracellular space, and the greater the available EP current that can flow into still undepolarized areas. The rate of further depolarization processes in the neuronal fibres, and consequently the propagation speeds of further AP's, are thus increased proportionally. The charge reversal time of the membrane capacitor is therefore the parameter that determines the value 12 of the resting potential P0. When a stimulus ("excitation") starts from the lowest resting potential 12, then the Na+ influx is the largest, the EP-rise is steepest and the electrotonic flux is maximum. If an AP is triggered, then its propagation speed is in this case also maximum. But when a receptor stimulus starts from a higher potential 12a, 12b, 12c..., then the Na+ influx is partially inactivated, and the steepness of the EP-rise as well as its electrotonic flux velocity is decreased. Therefore, the propagation speed of an AP decreases too. These specific properties are used in molecular/biological organisms to produce either invariant time counting impulses ITCP, with periods tscan, or variable time counting impulses VTCP with periods t.vscan. In the latter case, the VTCP's are modulated in accordance with the relative speeds vm (via the STQ(v) parameters), and therefore have shorter intervals (see Figs. 3b, 3c).
The STQ(v)-quantum is determined by the deviation of the respective starting-potential from the lowest resting-potential P0, which serves as a reference value, and is measured by the duration of the capacitive charging of a cell membrane when a stimulus occurs at the receptor.

The duration of the charging is inversely proportional to the

velocity of the Na+ influx through the membrane channels into the

intracellular space. A cell membrane can be understood as an

electric capacitor, in which two conducting media, the intracellular

and the extracellular solution, are separated from one another by

the non-conducting layer, the membrane. The two media contain

different distributions of Na/K/Cl ions. The greater the

"stimulation dynamics" (see below) that first influences the outer

molecular media - corresponding to sensor 2 in Fig.

2a - and, subsequently, the inner molecular media - which

corresponds to sensor 1 in Fig. 2a - the faster is

the Na+ influx and the shorter the charging time (which determines

the parameter for the relative speed vm), and the faster is the AP

propagation velocity v(ap) in the neighbouring membrane districts.

The signals at the inner and outer sides, respectively, of the

membrane, correspond to the signal amplitudes V and W. The velocity

v(ap), therefore, indirectly generates the invariant time counting

pulses ITCP or the variable vm-proportional time counting pulses

VTCP.

These variable VTCP pulses are self-adaptive modulated time pulses that are correlated to the relative length. As explained in the following (contrary to the traditional physical sense), no "invariant time" exists; only "perceived time" exists. Of essential importance also is the difference between "stimulation intensity", whose measurement is determined by the AP frequency and therefore by the refractory period, and the "stimulation dynamics", whose measurement is defined by the charge duration of the cell membrane and therefore also by the speed of the Na+ influx. "Stimulation dynamics" is not the same as "increase of the stimulation intensity". It is a measure of the temporal/spatial variation of the position of the receptor relative to the position of the stimulus source, and therefore of the relative speed vm. The stimulation intensity corresponds to signal amplitudes, from which vm-adaptive STQ(d) elapse times Tδ(1,2,3...) are derived, while the stimulation dynamics is defined by the acquired STQ(v) parameters.

Fig. 4b and Fig. 4c show the

analysis of STQ elapse times in a molecular/biological model in an

easily comprehensible manner. The results of the analysis are used

to generate redundancy-free auto-adaptive pattern recognition as

well as autonomous regulating and self-organization processes. The

organism in the particular example shown here is forced to

distinguish certain types of foreign bodies that press on its

"skin". It must reply with a fast muscle reflex when it recognizes a

pinprick. But it should ignore the stimulus when it recognizes a

blunt object. A continuous vm-adaptive recording of STQ(d) elapse

times by means of VTCP pulses is necessary to do this. The frequency

of these time counting impulses is modulated in accordance with the

STQ(v) parameters of the stimulus dynamics (vm). These STQ(v)

parameters are required for the recording of the STQ(d) elapse times

Tδ(1,2,3...) from the signal amplitude at the current stimulus

intensity. The difference between "stimulation intensity" and

"stimulation dynamics" is easily seen in this example. A stimulus

can even show a different intensity if no temporal-spatial change

takes place between signal source and receptor. A needle in the skin

can cause a different sensory pattern even when its position is not

changing if, for example, it is heated. This sensory pattern is

determined by the signal amplitude, and consequently by the AP

frequency and by the STQ(d) quanta. As long as the needle persists

in an invariant position, the AP propagation velocity is constant,

because the membrane charging time is constant too. During the prick

into the skin, there is a "dynamic stimulation", and the STQ(d)

quantization of the signal amplitude is carried out in a manner that

depends on the pricking speed vm. It should be noted that two

temporally displaced signal amplitudes (at the inner and outer

membrane surface) always exist during this dynamic process. The

STQ(v) parameters are derived from this. The AP propagation

velocities and the acquired STQ(d) time patterns are adapted

accordingly ("temporal auto-adaptation").

The STQ(d) time

patterns Tδ(1,2,3,4,.....), measured adaptively according to the vm,

are constantly compared to and analysed together with the previously

measured and stored STQ(d) time patterns Tδ'(1,2,3...). This time

comparison process occurs continuously in the so-called synapses,

which are the junctions to axonal endings of other neurons. The

probability density values that are produced at the synapses, and

which are used to represent the convergence of both regression

curves, are communicated for further processing to peripheral neural

systems, or to muscle fibres in order to trigger a motoric reflex.

Fig. 4b shows the vm-dependent propagation of an AP from a sensory neuron (receptor) 20 along an axon to a synapse, where a comparison of acquired time sequences takes place through molecular "covariance analysis". This receptor functions like a "pressure sensor". If a needle 21 with a certain dynamics impinges on the outer side of the cell membrane, then this stimulation causes triggering of AP's 23 as described in Fig. 4a. The AP's propagate in the axon 22 with a STQ(v)-dependent speed vap. The sequence (a'.....v') represents the signal amplitude values that are produced by the pinprick. The sequence begins with the phase transition at the first threshold value P1, continues over P2, P3, P4 (at which point the stimulus maximum is attained), and finally to the phase transitions through P3 and P2. The intensity zones for stimulus perception are designated with Z1, Z2, Z3 and Z4. The periods t(P1), t(P2), t(P3), t(P4)..., and the magnitudes of the AP's serve to identify the particular threshold in which the stimulation intensity is currently to be found. Their temporal sequence is therefore a type of "code". AP's are not time counting pulses. Besides their coding function, they also serve as (indirect) activating and deactivating pulses for the recording of STQ(d) elapse times. The actual vm-dependent measurement of the STQ elapse times Td(1), Td(2), Td(3), Tw(4) and Td(4)... (see Fig. 2c), as well as the comparison of these with previously recorded elapse times, takes place in the synapse 24. At the presynaptic terminal of the axons, the AP's 23 arrive with variable velocities vm(n...), according to the dynamics of the needle prick as well as the measured STQ(v) parameters. This variable arrival velocity at the synapses is the key to producing the adaptive time counting impulses VTCP (see Fig. 3c) with vm-modulated frequency ƒscan.
The synapse is separated from the postsynaptic membrane by the "synaptic cleft", and the postsynaptic membrane, for its part, is interconnected with other neurons; for instance, to a "motorneuron" 25. This neuron generates a so-called "excitatory postsynaptic potential" (EPSP) 27 that is approximately proportional to the convergence probability g. If this EPSP (or, equivalently, the probability density g) exceeds a certain threshold value, then, in turn, an action potential AP 28 is triggered. This AP is communicated via motoaxon 26 to the "neuromuscular junction", at which a muscle reflex is triggered. The incoming AP sequences 23 generate the release of particular amounts of molecular transmitter substance from their repositories - tiny spherical structures in the synapse, termed "vesicles". In principle, a synapse is a complex programmable time data processor and analyzer that empties the contents of a vesicle into the presynaptic cleft when the recurrence of any prior recorded synaptic structure is confirmed within a newly recorded key sequence. The synaptic structures and vesicle motions are generated by the dynamics (vap) of the AP ionic flux, as well as by its frequency. AP influx velocities v(ap) correspond to the STQ(v) elapse times, and AP frequencies correspond to the STQ(d) elapse times. The transmitter substance is reabsorbed by the synapse, and reused later, whereby the cycle continues uninterrupted.

We now present a detailed description of Fig. 4b (referring also to Figs. 4e and 4f). The ionic influx of the initial incoming AP 23 (a') activates the spherical structures (vesicles) containing the ACh transmitter molecules. These molecules are released in the form of a "packet". The duration of this ACh packaging depends on the dynamics (represented by the velocity v(ap)) of the AP ionic influx at the presynaptic terminal, and therefore on the stimulus dynamics (represented by vm) at the receptor 20. Each subsequent incoming AP, namely b', c'..., in turn causes neurotransmitter substances in the vesicle to be released toward the synaptic cleft. The following are all elapse time counting and covariance analyzing characteristics: the duration of accumulation of neurotransmitter substance T(t); the velocities v(t) with which the neurotransmitter substances move in the direction of the synaptic cleft; the effects induced by the neurotransmitter substances at the synaptic lattice at the synaptic cleft; the duration of pore opening; and so on. By means of AP's acting on synaptic structures, not only are the actual time counting frequencies ƒscan generated (to be used in vm-dependent measurement of STQ(d) elapse times as described in Fig. 2c), but time patterns are also stored and analysed.

If the pattern of a current temporal sequence is recognised by the synapse as matching an existing stored pattern, a pore opens at the synaptic lattice, and all of the neurotransmitter content of a vesicle is released into the subsynaptic cleft. The released transmitter molecules (mostly ACh) combine at the other side of the cleft with specific receptor molecules of the sub-synaptic membrane of the coupled neuron. Thus, a postsynaptic potential (EPSP) is generated, which then propagates to other synapses, dendrites, or to a "neuromuscular junction". If the EPSP exceeds a certain amplitude, then it triggers an action potential (AP) of the described type, which then triggers, for example, a muscle reflex. If the potential does not reach this threshold, then the EPSP propagates in the same manner as an EP (i.e. in an electrotonic manner); an AP is not produced in this case.
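
The matching step described above can be sketched as follows. The patent does not specify the comparison at this level of detail, so the sketch substitutes a plain Pearson correlation for the "covariance analysis" mentioned in the text. The function names `similarity` and `vesicle_released` and the cut-off of 0.95 are hypothetical.

```python
from statistics import mean


def similarity(current, stored):
    """Pearson correlation of two equal-length elapse-time sequences.

    Used here as a stand-in for the text's "covariance analysis".
    Returns 0.0 when either sequence has no variance.
    """
    if len(current) != len(stored) or len(current) < 2:
        raise ValueError("sequences must have equal length >= 2")
    mc, ms = mean(current), mean(stored)
    cov = sum((c - mc) * (s - ms) for c, s in zip(current, stored))
    var_c = sum((c - mc) ** 2 for c in current)
    var_s = sum((s - ms) ** 2 for s in stored)
    if var_c == 0 or var_s == 0:
        return 0.0
    return cov / (var_c * var_s) ** 0.5


def vesicle_released(current, stored_patterns, min_similarity=0.95):
    """True if the current sequence matches any stored pattern closely enough."""
    return any(similarity(current, p) >= min_similarity for p in stored_patterns)
```

One consequence of this particular choice is that a sequence whose elapse times are all stretched or compressed by the same factor still matches the stored pattern, because the correlation ignores overall scale. Whether that is the appropriate notion of "covariant" is left open here.
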

Of special significance is the summing property of the subsynaptic membrane. This characteristic, termed "temporal facilitation", results in the summation of the amplitudes of the generated EPSP's if they arrive in rapid succession within certain time intervals. Each release of neurotransmitter molecules into the synaptic cleft indicates an increased probability density occurring during the comparison of instantaneous vm-proportionally acquired STQ time patterns with prior vm-proportionally recorded STQ time patterns. Increased probability density causes a higher frequency of transmitter substance release and therefore a higher summation rate of the EPSP's, which in turn produces postsynaptic action potentials (AP) at a significantly increased rate. Therefore, a postsynaptic AP is effectively a confirmation signal that flags the fact that isomorphism between a previously and a currently recorded time data pattern has been recognized. On the basis of this time pattern comparison, the object that caused the perception at the receptor cell is thereby identified as "needle"; and the command to "trigger a muscle reflex" is conveyed to the corresponding muscle fibres.
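
The temporal summation described above can be illustrated with a simple leaky accumulator. This is a sketch under stated assumptions, not the patent's mechanism: the exponential decay between releases, the reset after an AP, the function name `postsynaptic_ap_times` and all parameter values are hypothetical.

```python
import math


def postsynaptic_ap_times(release_times, epsp=1.0, tau=5.0, threshold=2.0):
    """Return the times at which the summed EPSP's reach the threshold.

    Each transmitter release adds `epsp` to the membrane potential, which
    decays exponentially with time constant `tau` between releases. The
    potential is reset to zero after each AP.
    """
    potential = 0.0
    last_t = None
    ap_times = []
    for t in sorted(release_times):
        if last_t is not None:
            potential *= math.exp(-(t - last_t) / tau)
        potential += epsp
        last_t = t
        if potential >= threshold:
            ap_times.append(t)
            potential = 0.0
    return ap_times
```

With these values, releases at times 0, 1, 2 and 3 sum up quickly enough to produce an AP, whereas releases at 0, 10, 20 and 30 decay away between events and never reach the threshold.
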

Parallel and more exact recognition processes are executed by the central nervous system CNS (i.e. the brain). From the sensitive skin-receptor neuron 20, a further axonal branching 29 is connected via a synapse 30 to a "CNS neuron". In contrast to the "motorneuron", which actuates the motoric activity of the organism directly, a CNS neuron serves for the conscious recognition of a receptoric stimulation sequence. An AP 31, produced at the postsynaptic cell membrane 30, can spread out along dendrites in the axon 30a, as well as to several other CNS neurons; or, alternatively, indirectly via CNS neurons to a motorneuron, then on to a neuromuscular junction.

The parameters controlling the recording of STQ time quanta in the synapses 25 and 30 can differ with different synaptic structures. (Indeed, the synaptic structures themselves are generated by continuous "learning" processes.) This explains how it is possible for a needle prick to be registered by the brain while eliciting no muscular response, or how a fast muscle reflex can be produced while its cause is hardly perceived by the brain. The first case shows a conscious reflex, the other case an instinctive reflex. The latter occurs when the CNS synapse 30 (in contrast to the synapse 25) cannot find enough isomorphic structures: transmitter molecules are not released with sufficient frequency, and consequently no postsynaptic AP 31 and no conscious recognition of the perceived stimulus can take place. Numerous functions of the central nervous system, as well as phenomena such as "consciousness" and "subconscious", can be explained in such a monistic way. Generally, auto-adaptive processes are deeply interlaced in organisms, and are therefore extremely complex. In order to distinguish a needle prick from the pressure of a blunt eraser, considerably more time patterns are necessary; in addition, more receptors and synapses must be involved in the recognition process.
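
The two pathways described above can be summarised in a few lines. The sketch assumes that each pathway reduces to a single match level compared against its own threshold; the function name `response`, the threshold defaults and the result labels are hypothetical and only serve to make the case distinction explicit.

```python
def response(g_motor, g_cns, motor_threshold=1.0, cns_threshold=1.0):
    """Classify the outcome from the match levels reached at two synapses.

    `g_motor` and `g_cns` stand for the summed match (probability density)
    reached at the motoneuron synapse and at the CNS synapse respectively.
    """
    reflex = g_motor >= motor_threshold
    perceived = g_cns >= cns_threshold
    if reflex and perceived:
        return "reflex, consciously perceived"
    if reflex:
        return "reflex without conscious perception"
    if perceived:
        return "perceived without reflex"
    return "no response"
```

A call such as `response(1.5, 0.2)` corresponds to the fast reflex whose cause is hardly perceived, and `response(0.2, 1.5)` to the prick that is registered by the brain without a muscular response.
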

Fig. 4c illustrates the process by which moderate pressure from a blunt object (e.g. a conical eraser on a pin) is recognized, resulting in no muscle reflex. The blunt object 32 presses down with a certain relative velocity vm onto a series of receptors in neural skin cells 33, 34, 35, 36 and 37. Several sequences of AP's 39, 40, 41, 42 and 43 are produced after the individual adjacent receptors (see also Fig. 4b) are stimulated. These action potentials propagate along the collateral axons 38 with variable periods t(P1,2,3..) and velocities vap(1..5), which result on the one hand from the prevailing stimulation intensity, and on the other hand from the respective stimulation dynamics. Since each receptor stimulus generates a different pattern of STQ(v) and STQ(d) quanta, various AP sequences a'.....m' emerge from each axon. All sequences taken together represent the pattern of STQ elapse times which characterises the pressure of the eraser on the skin. These variable AP ionic fluxes reach the synapses 44, 45, 46, 47 and 48, which are interconnected via the synaptic cleft with the motoneuron 49. As soon as the currently acquired STQ time data pattern shows a similarity to a prior recorded STQ time data pattern, each individual synapse releases the contents of a vesicle into the subsynaptic cleft. Simultaneously, this produces an EPSP at the subsynaptic membrane of the neuron. These EPSP's are mostly below the threshold. The required threshold value for the release of an AP is reached only when a number of EPSP's are summed. This happens only when a so-called "temporal facilitation" of such potentials occurs, as described above.

In the model shown, the individual EPSP's 50, 51, 52, 53 and 54 effect this summing property of the subsynaptic membrane. These potentials correspond to receptor-specific probability density parameters g1, g2, g3, g4 and g5, which represent the degree of isomorphism of the time patterns. Simultaneous neurotransmitter release in several synapses, for example in 45 and 47, causes particular EPSP's to be summed to a total potential 56, which represents the sum of the particular probability densities G = g1+g3. This property of the neurons (i.e. the summing of spatially separated subliminal EPSP's when release of neurotransmitter substance appears simultaneously at a number of parallel synapses on the same subsynaptic membrane) is termed "spatial facilitation".
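
The spatial summation G = g1+g3 can be written out directly. In the sketch below, the dictionary of g values, the threshold `g_t` and the function names `summed_epsp` and `fires` are illustrative assumptions; only the idea of adding the contributions of simultaneously releasing synapses and comparing the sum with a threshold comes from the text.

```python
def summed_epsp(g, releasing):
    """Sum the contributions g[i] of the synapses that release simultaneously."""
    return sum(g[i] for i in releasing)


def fires(g, releasing, g_t):
    """True if the summed EPSP reaches the threshold g_t."""
    return summed_epsp(g, releasing) >= g_t
```

For example, with the hypothetical values `g = {1: 0.3, 2: 0.2, 3: 0.25, 4: 0.2, 5: 0.3}` and `g_t = 1.0`, `fires(g, [1, 3], g_t)` is false, while `fires(g, [1, 2, 3, 4, 5], g_t)` is true.
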

In the described model case, the summed EPSP 56 does not, however, reach the marked threshold (gt), and therefore no AP is produced. Instead, the EPSP propagates in the sub-synaptic membrane region 49 of the neuron, or in the following motoaxon 55, respectively, as a passive electrotonic potential (EP). Such an EP (in contrast to a self-regenerating active AP) attenuates within a few millimetres along the axon, and therefore has no activating influence on the neuromuscular junction, and consequently no activating influence on the muscle. The stimulation of the skin by pressing with the eraser is therefore not sufficient to evoke a muscle reflex.
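
The attenuation of a passive EP can be illustrated with the exponential decay used in simple textbook cable models, V(x) = V0 * exp(-x / lambda). This model, the length constant of 1 mm and the function names are assumptions brought in for illustration; the text itself only states that the EP dies out within a few millimetres.

```python
import math


def electrotonic_amplitude(v0, distance_mm, length_constant_mm=1.0):
    """Amplitude of a passive potential after spreading `distance_mm`."""
    return v0 * math.exp(-distance_mm / length_constant_mm)


def reaches_junction(v0, distance_mm, min_amplitude, length_constant_mm=1.0):
    """True if the passive potential is still at least `min_amplitude`."""
    return electrotonic_amplitude(v0, distance_mm, length_constant_mm) >= min_amplitude
```

Under these assumptions a potential loses about 63 % of its amplitude per millimetre, so that after 5 mm less than 1 % remains. An actively regenerated AP is not subject to this decay.
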

It would be a different matter if the eraser were to break off and the bare pin were to strike the skin receptors with full force. In this case, neurotransmitter substances would be released simultaneously in all five synapses 50, 51, 52, 53 and 54, because the acquired STQ time patterns Tδ(1,2,3..), with very high probability, would be similar to those STQ time patterns Tδ'(1,2,3...) already stored in the synaptic structures that pertain to the event "needle prick". Because of their temporal and spatial "facilitation", the EPSP's would be summed to a supraliminal EPSP 56, and a postsynaptic AP would be produced that propagates along the motoaxon 55 in a self-regenerating manner (without temporal and spatial attenuation) up to the muscle, producing a muscle reflex.

As in Fig. 4b, in the present example a recognition process takes place in parallel in the central nervous system (CNS). From the skin receptor cells 33, 34, 35, 36 and 37, collateral axonal branches extend to CNS synapses that are connected to other neurons 58. Such branches are termed "divergences". The subdivision of axons into collateral branches in different neural CNS districts, and the temporal and spatial combination of many postsynaptic EPSP's, allows conscious recognition of complex perceptions in the brain (for example, the fact of an eraser pressing onto the skin). Since this recognition has to take place independently of the production of a muscle reflex, the sum of individual EPSP's must be supraliminal in the CNS. Otherwise, no postsynaptic AP - i.e. no signal of confirmation - can be produced. As an essential prerequisite for this, it is necessary that auto-adaptive processes have already occurred which have formed certain pre-synaptic and sub-synaptic STQ time structures in the parallel synapses 58. These structures hold information (time sequences; i.e. patterns) pertaining to similar sensory experiences (e.g. "objects impinging on the skin" - amongst these, a conical eraser). Obviously, the threshold for causing an AP in the postsynaptic membrane structure of the CNS neurons 58 (and therefore also in the brain) has to be lower than in the motoneuron membrane 49 described previously. The sum of these EPSP's must therefore also be larger than the sum of the EPSP's g1, g2, g3, g4 and g5. Isomorphisms of STQ time patterns in the CNS synapses of the brain have to be more precisely marked out than those in the synapses of motoneurons, which are only responsible for muscle reflexes.

The structure of the CNS synapses must be able to discern finer information, so it must be more subtle. The production of a sub-synaptic AP represents a confirmation of the fact that a currently acquired Tδ(1,2,3...) time pattern is virtually isomorphic to a prior recorded reference time pattern Tδ'(1,2,3...), which, for example, arose from a former sensory experience with an eraser impinging at a certain location on the skin. If such a former experience has not taken place, the consciousness has no physical basis for the recognition, since the basis for time pattern comparison is missing. In such a case, therefore, a learning process